TABLE OF CONTENTS

Experience the Future of Speech Recognition Today

Try Vatis now, no credit card required.

.avif)

Introduction: Why Speaker Diarization Matters

What is Speaker Diarization?

Speaker diarization is the process of breaking down an audio file into segments and determining which speaker corresponds to each segment. It answers the question, "Who spoke when?" This is crucial for applications such as transcription, media monitoring, and conversational AI.

For example, in a political debate or business meeting, speaker diarization helps differentiate speakers, ensuring accurate attribution of speech during transcription. This makes it easier to search and analyze specific topics without manually reviewing the entire recording.

From a machine learning perspective, speaker diarization uses various approaches to achieve this segmentation. This blog will explore three common methods to help you choose the right one for your needs.

Method 1: Pipeline-Based Approach

One widely-used approach to speaker diarization is a multi-step process that combines voice activity detection, segment encoding, and clustering. This is exemplified by frameworks like pyannote.

Steps in the Pipeline Approach

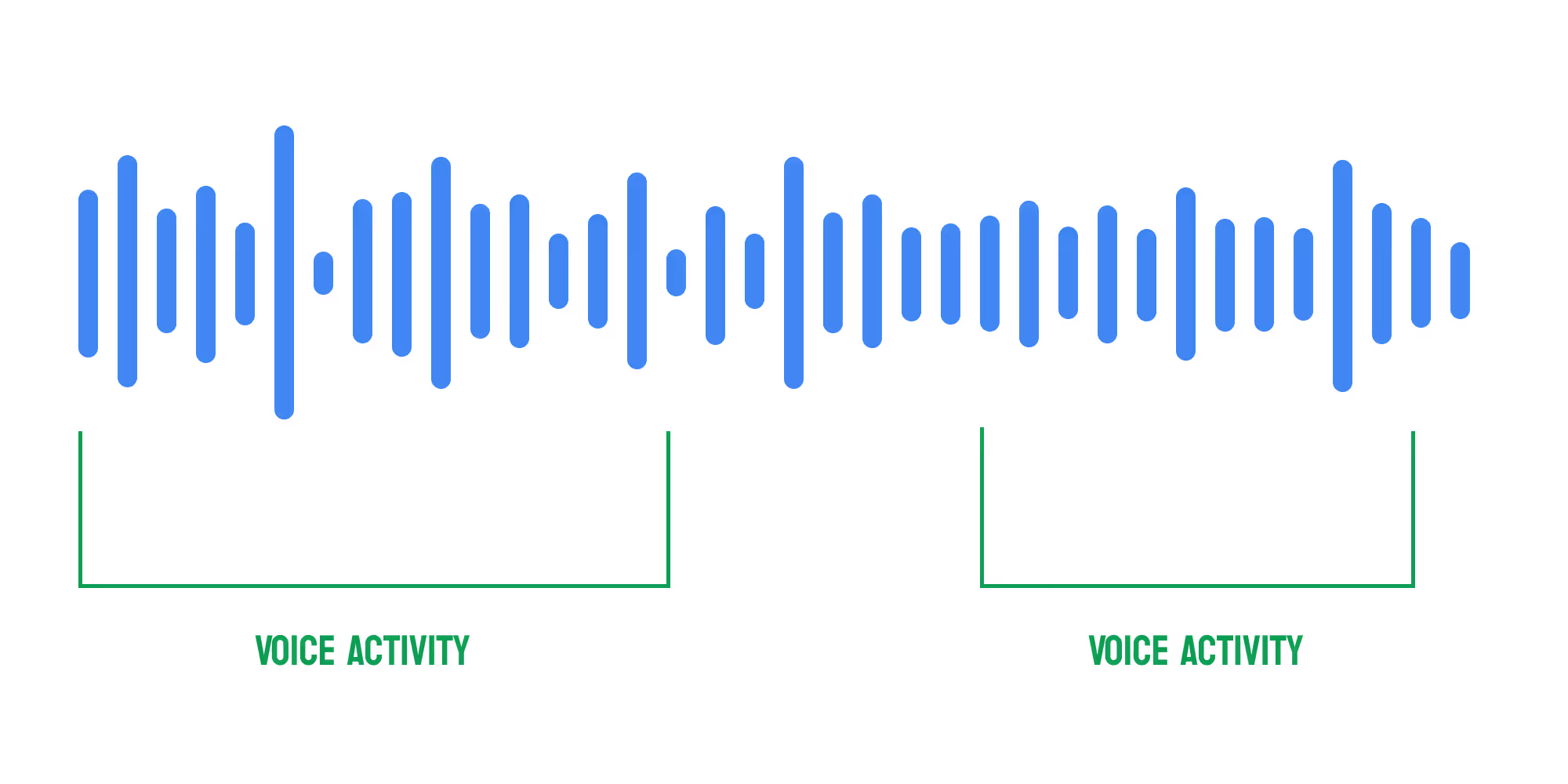

1. Voice Activity Detection

A neural network identifies distinct segments of speech within an audio file, separating speech from silence or noise.

2. Segment Encoding

Two additional neural networks refine these segments—one detects speaker changes, while another identifies overlapping speech. A fourth neural network encodes these segments into mathematical vectors, with similar vectors representing the same speaker.

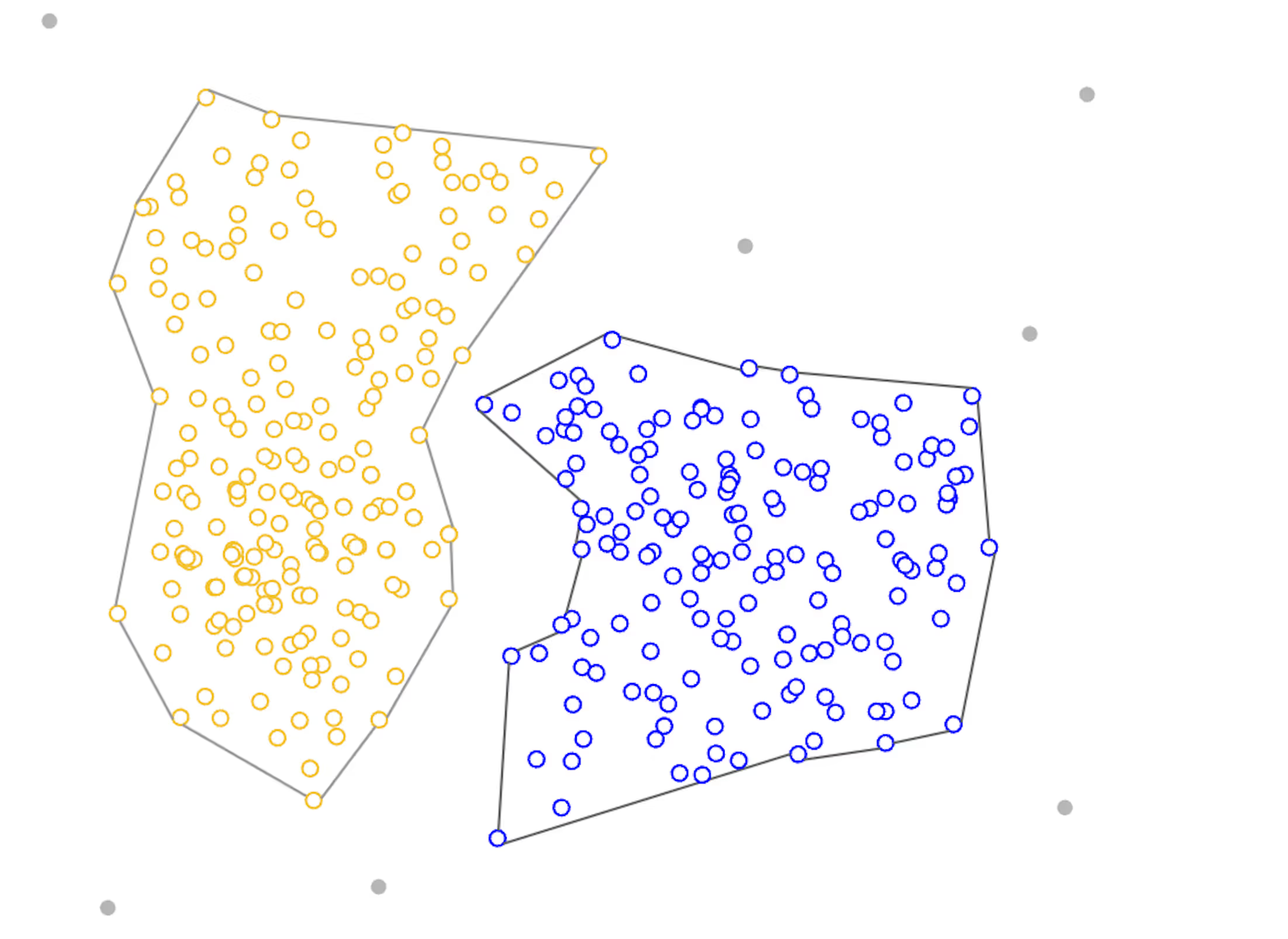

3. Clustering

The encoded segments are then processed using a density-based clustering algorithm. This technique plots the encodings for each segment and groups them based on a similarity function, effectively attributing segments to the correct speaker.

.avif)

Key Features

- Structured, step-by-step process.

- Allows for fine-tuning at each stage.

- Effective when the number of speakers is predefined.

Method 2: End-to-End Transformer Model

Another approach is using an end-to-end transformer model, such as the one described in this research paper. This method employs a single neural network that directly processes the entire audio input to produce speaker labels.

Key Features of End-to-End Transformer Model

1. Audio Analysis

The model processes audio frame-by-frame (e.g., every 20ms), identifying if a specific speaker is active during each frame. This is done by converting the audio input into a log-mel spectrogram, which the model uses to learn the relationships between different segments.

.avif)

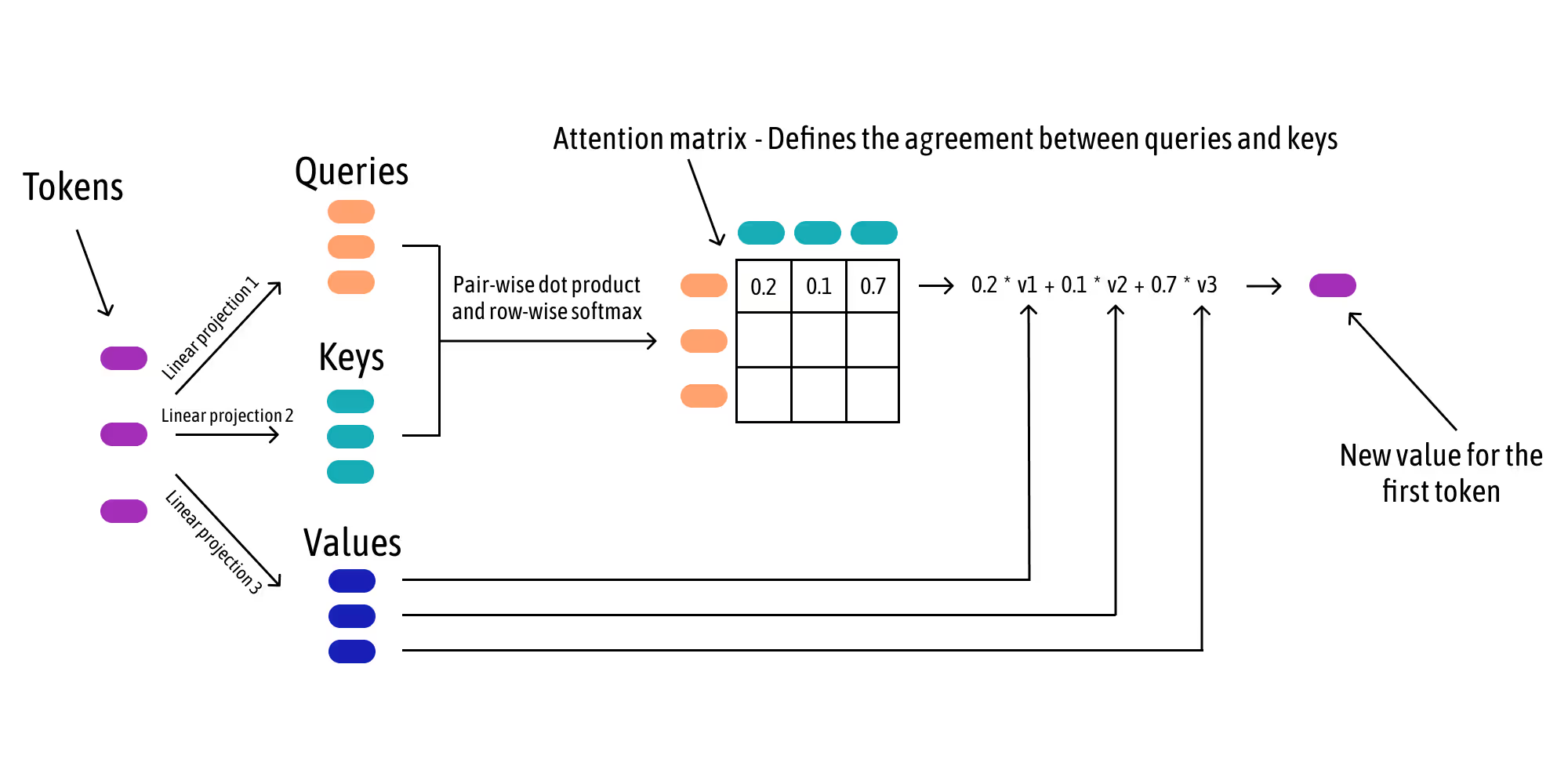

2. Attention Mechanisms

Models like OpenAI’s Whisper (source) use attention mechanisms to identify relationships between different audio segments. The model creates an internal representation (a mathematical vector) for each time frame, grouping similar tokens together while keeping different ones apart.

In attention, each token generates a query, a key, and a value. The query represents what the token is looking for, the key represents what the token has to offer, and the value is what the token contributes towards building the vocal fingerprints. An attention matrix is created, measuring how similar each token is to others. Each token is then transformed into a weighted sum of similar tokens. This process groups similar tokens together and separates different ones. The transformer model efficiently groups time frames with similar vocal traits, which helps achieve accurate speaker diarization.

Key Features:

- Efficiently processes sequential data with a single model.

- Uses attention mechanisms to capture complex relationships.

- Directly optimized for speaker diarization.

Method 3: Advanced Clustering Techniques

Modern diarization systems employ advanced clustering techniques using state-of-the-art machine learning algorithms to enhance flexibility and adaptability.

Key Features of Clustering Techniques

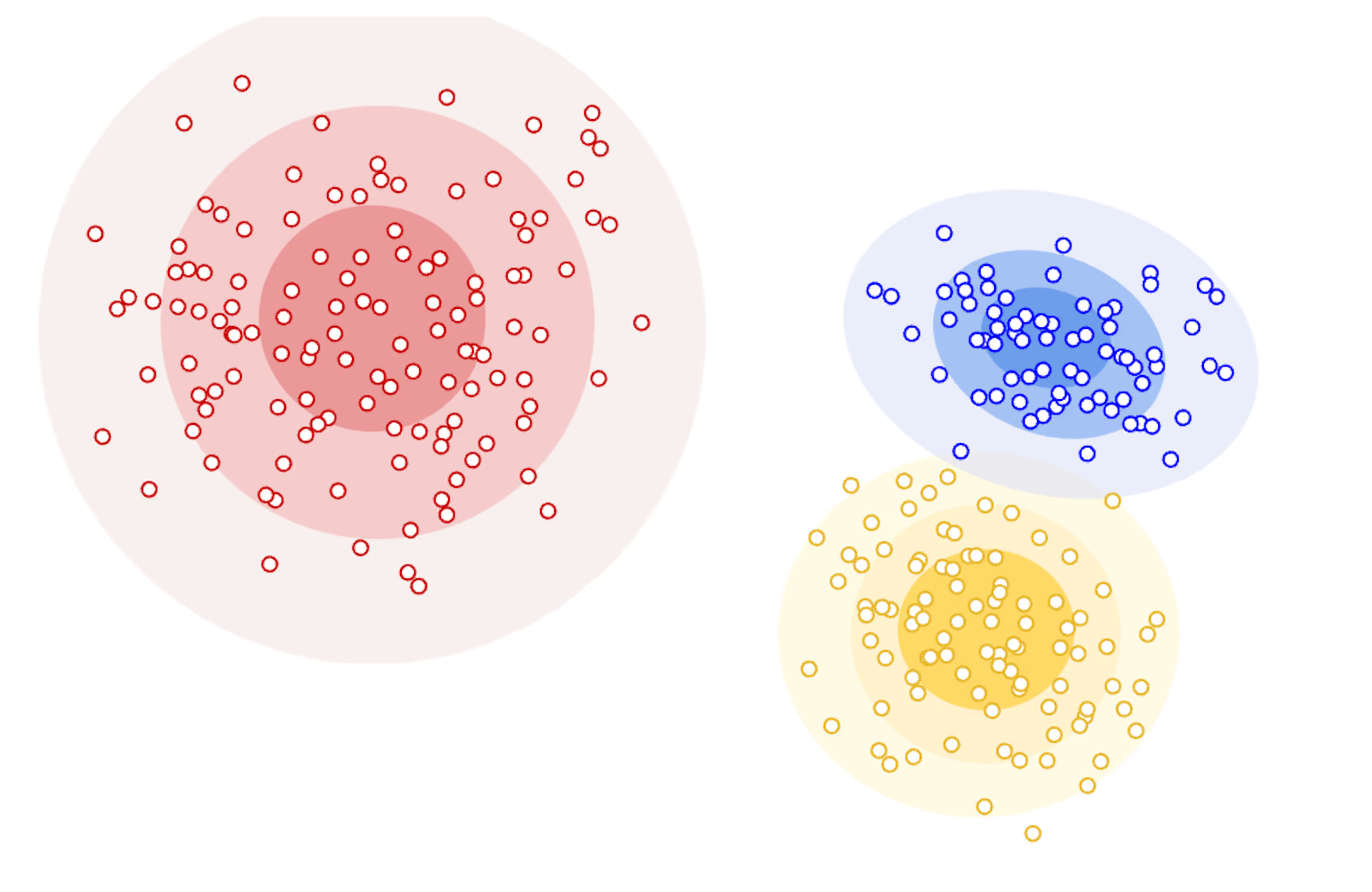

1. Model-Based Clustering (e.g., GMM)

Assumes a specific data distribution (like Gaussian) and requires a predefined number of clusters. It models overlapping data and handles multiple distributions effectively, useful when the characteristics of the data are known.

2. Density-Based Clustering (e.g., DBSCAN)

Does not require a predefined number of clusters and adapts to the natural grouping in the data. It handles clusters of varying shapes and densities, managing noise and outliers effectively.

Key Features:

- Maximum flexibility and adaptability.

- Suitable for dynamic scenarios with unknown numbers of speakers.

- Handles noise and diverse data structures well.

Choosing the Right Speaker Diarization System

Selecting the best speaker diarization method depends on your specific application and the characteristics of your data.

Summary of Methods

Here is a summary of the pros and cons of each method:

Method 1: Pipeline-Based Approach

- Advantages: Effective in scenarios where the data follows a known distribution or structure.Provides clear, sequential steps for voice activity detection, segment encoding, and clustering, which can be fine-tuned at each stage.

- Drawbacks: Less flexible due to its multi-step nature, requiring predefined assumptions (like the number of clusters). Can struggle with real-world scenarios where the number of speakers is unknown or dynamically changing.

Method 2: End-to-End Deep Learning Approach

- Advantages: Highly efficient for processing sequential data using a single model, with direct optimization for the end goal. Handles large-scale data and complex relationships between audio segments with attention mechanisms.

- Drawbacks: Requires a large amount of labeled training data, which may not always be available. Less adaptable to scenarios with unknown numbers of speakers or changing environments, and may require frequent retraining to perform well.

Method 3: Advanced Clustering Techniques

- Advantages: Offers maximum flexibility and adaptability, suitable for complex, real-world scenarios where the number of speakers or clusters is unknown. Does not require assumptions about data distribution or cluster numbers, making it ideal for unstructured or unpredictable environments.

- Drawbacks: Complex to implement and requires a deeper understanding of algorithms. Performance may vary based on clustering parameters.

How to Choose the Best Model

1. Understand Your Data Characteristics

- If your data is well-structured and you can define the number of speakers, consider Method 1.

- For large amounts of labeled data and complex audio relationships, Method 2 may be most effective.

- If flexibility and adaptability are needed, especially in unpredictable environments, choose Method 3.

2. Evaluate Your Application Needs

- For high flexibility and robustness against noise, go with Method 3.

- For large-scale applications needing efficiency, consider Method 2.

3. Assess Your Resources

- Choose Method 1 or 2 for simpler cases with limited data or resources.

- Opt for Method 3 if you have the expertise and computational capacity.

4. Consider Scalability

- If scalability is crucial, Method 2 offers the most efficient processing.

Final Thoughts

This blog provides a foundation for understanding speaker diarization and the different methods available. By evaluating your data characteristics, application needs, and resources, you can select the best solution, whether it's for monitoring debates, transcribing meetings, or analyzing large-scale audio recordings.

.avif)